Manna model

The Manna model is similar in concept to the BTW model. However, where BTW redistributes its “sand grains” deterministically, the Manna model introduces some randomness. Let’s take a look:

[3]:

from SOC import Manna

model = Manna(L=3, save_every=1)

model.critical_value

[3]:

1

This means that a site topples as soon as it holds more than one grain, so the model begins toppling its “sandpiles” once we put two grains in the same place. Let’s try that:

[20]:

model = Manna(L=3, save_every=1)

model.values[2,2] = 2

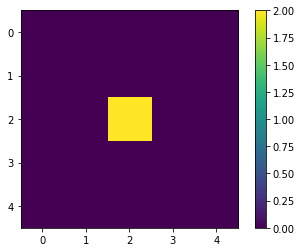

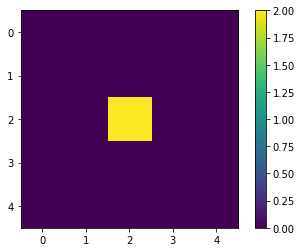

model.plot_state(with_boundaries=True);

model.AvalancheLoop()

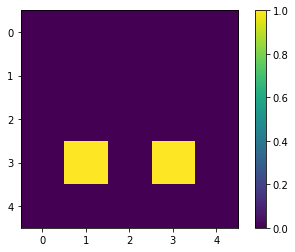

model.plot_state(with_boundaries=True);

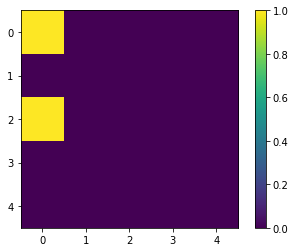

Why these two target locations in particular? They are actually chosen at random! Let’s rerun that:

[21]:

model = Manna(L=3, save_every=1)

model.values[2,2] = 2

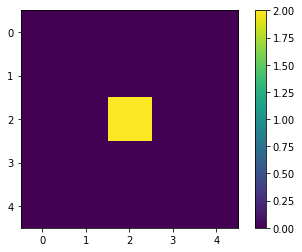

model.plot_state(with_boundaries=True);

model.AvalancheLoop()

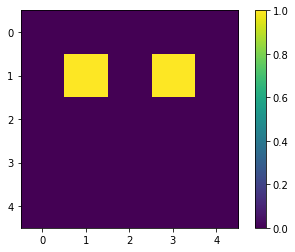

model.plot_state(with_boundaries=True);

[22]:

model = Manna(L=3, save_every=1)

model.values[2,2] = 2

model.plot_state(with_boundaries=True);

model.AvalancheLoop()

model.plot_state(with_boundaries=True);

Oh, that’s a bit weird, isn’t it? The grains seem to have moved awfully far from where we dropped them. The trick is that the two grains leaving a toppling site each pick their destination at random, independently of one another, and here they both picked (1, 1) at first. That site then held two grains and toppled in turn.
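To get a feel for how often two grains land on the same site, here is a minimal sketch of the independent choice. It is not the `SOC` library’s code: the helper `pick_targets` is hypothetical, and it assumes each grain moves to one of the four nearest neighbours with equal probability (the library’s neighbourhood may differ).

```python
import numpy as np

# Assumed neighbourhood: up, down, left, right (an assumption, not taken from SOC).
OFFSETS = np.array([(-1, 0), (1, 0), (0, -1), (0, 1)])

def pick_targets(site, rng, n_grains=2):
    """Return one destination per grain, each drawn independently (hypothetical helper)."""
    picks = rng.integers(len(OFFSETS), size=n_grains)
    return np.asarray(site) + OFFSETS[picks]

rng = np.random.default_rng(0)
trials = 10_000
same = 0
for _ in range(trials):
    first, second = pick_targets((2, 2), rng)
    # Count the trials in which both grains chose the same neighbour.
    same += int(np.array_equal(first, second))

fraction_same = same / trials
print(f"both grains chose the same neighbour in {fraction_same:.1%} of trials")
```

Under these assumptions the two grains coincide in roughly a quarter of topplings, so chains of repeated topplings like the one above are not rare.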

Let’s run it for some more time:

[25]:

model = Manna(L=5, save_every=1)

model.run(1000)

model.animate_states(notebook=True)

Waiting for wait_for_n_iters=10 iterations before collecting data. This should let the system thermalize.

Let’s run a larger simulation instead:

[37]:

model = Manna(L=10, save_every=1)

model.run(1000)

model.animate_states(notebook=True)

Waiting for wait_for_n_iters=10 iterations before collecting data. This should let the system thermalize.

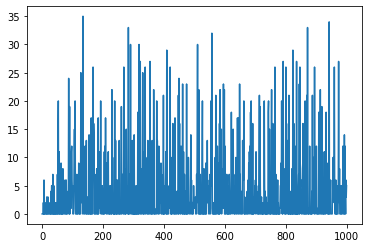

If you look closely, you’ll see that the system begins to exhibit very large avalanches very soon:

[38]:

model.data_df.AvalancheSize.plot()

[38]:

<matplotlib.axes._subplots.AxesSubplot at 0x7f2828870050>

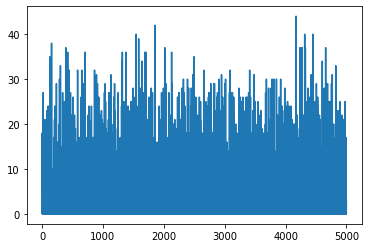

Let’s take a look at how modifying the critical value affects the simulation. We’ll do some more iterations, so the system has the opportunity to “fill up” better. We’ll also skip some animation frames.

[47]:

model = Manna(L=10, critical_value=4, save_every=10)

model.run(5000, wait_for_n_iters=1000)

model.animate_states(notebook=True)

Waiting for wait_for_n_iters=1000 iterations before collecting data. This should let the system thermalize.
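The warm-up period mentioned in the message can be illustrated with a small stand-alone driving loop. This is only a sketch under stated assumptions, not the library’s implementation: the functions `relax` and `drive` and the `warmup` parameter are hypothetical, a site topples when it exceeds `critical_value` (assumed to be at least 1), two grains leave per toppling towards independently chosen nearest neighbours, and grains that leave the grid are lost.

```python
import numpy as np

OFFSETS = [(-1, 0), (1, 0), (0, -1), (0, 1)]  # assumed nearest-neighbour moves

def relax(values, critical_value, rng):
    """Topple until every site is stable; return the number of topplings."""
    size = 0
    while True:
        unstable = np.argwhere(values > critical_value)
        if len(unstable) == 0:
            return size
        for i, j in unstable:
            values[i, j] -= 2  # two grains leave the toppling site
            for _ in range(2):
                di, dj = OFFSETS[rng.integers(4)]
                ni, nj = i + di, j + dj
                # Grains stepping outside the grid are dropped (open boundary).
                if 0 <= ni < values.shape[0] and 0 <= nj < values.shape[1]:
                    values[ni, nj] += 1
            size += 1

def drive(L, n_iters, warmup, critical_value=1, seed=0):
    """Add one grain per step; record avalanche sizes only after the warm-up."""
    rng = np.random.default_rng(seed)
    values = np.zeros((L, L), dtype=int)
    sizes = []
    for step in range(warmup + n_iters):
        i, j = rng.integers(L, size=2)
        values[i, j] += 1
        size = relax(values, critical_value, rng)
        if step >= warmup:  # discard the transient while the grid fills up
            sizes.append(size)
    return values, np.array(sizes)

values, sizes = drive(L=10, n_iters=500, warmup=200)
print(f"recorded {len(sizes)} avalanches, largest size {sizes.max()}")
```

Discarding the first `warmup` steps keeps the initially empty grid from biasing the recorded avalanche sizes towards small values.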

[48]:

model.data_df.AvalancheSize.plot()

[48]:

<matplotlib.axes._subplots.AxesSubplot at 0x7f281fc7fb90>

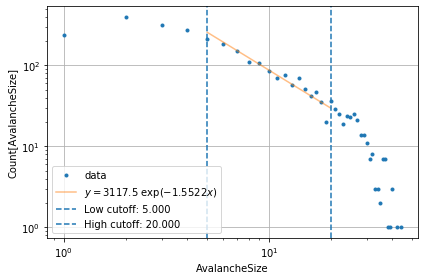

Note how the avalanche size grows up to a certain point, and then fluctuates randomly around fairly large values. We can investigate the histogram of those avalanche sizes. We’ll also fit a line to the segment that looks linear (picked purely subjectively, visually and heuristically).

[53]:

model.get_exponent(low=5, high=20)

y = 3117.481 exp(-1.5522 x)

[53]:

{'exponent': -1.552219845297347, 'intercept': 3.4938038036304393}

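One thing is certain: there is a region where the histogram is linear on a log-log scale, and the line fits it rather well. A straight line on log-log axes corresponds to a power law, with the fitted slope (about -1.55 here) as the scaling exponent.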
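As a rough illustration of what a straight-line fit in log-log space means, the sketch below fits synthetic, noise-free power-law data with `numpy.polyfit`. It does not reproduce how `get_exponent` works internally; the data and variable names are made up for the example, and only the final line uses a number copied from the output above.

```python
import numpy as np

# Synthetic, noise-free power law y = 1000 * x**-1.5 (illustrative values only).
x = np.arange(5, 21, dtype=float)
y = 1000.0 * x ** -1.5

# Fit a straight line to (log10 x, log10 y):
# the slope is the exponent, the intercept is log10 of the prefactor.
slope, intercept = np.polyfit(np.log10(x), np.log10(y), 1)
prefactor = 10 ** intercept
print(f"y = {prefactor:.3f} * x^({slope:.4f})")

# Applying the same relation to the intercept reported above
# gives a value close to the printed prefactor 3117.481.
reported_prefactor = 10 ** 3.4938038036304393
print(f"10**intercept = {reported_prefactor:.3f}")
```

Read this way, the reported `intercept` is the base-10 logarithm of the prefactor and the reported `exponent` is the slope of the line on log-log axes.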

Let’s run a larger simulation and try to estimate the scaling exponent. We’ll wait for a good while so that the system can thermalize well:

[61]:

model = Manna(L=40, save_every=100)

model.run(100000, wait_for_n_iters=50000)

Waiting for wait_for_n_iters=50000 iterations before collecting data. This should let the system thermalize.

[62]:

model.animate_states(notebook=True)